Regression and Classification

One of the key purposes of data science is to model and predict trends. The Ames housing dataset lends itself to both regression modelling and classification.

Regression and Classification with the Ames Housing Data

You have just joined a new “full stack” real estate company in Ames, Iowa. The strategy of the firm is two-fold:

- Own the entire process from the purchase of the land all the way to sale of the house, and anything in between.

- Use statistical analysis to optimize investment and maximize return.

The company is still small, and though investment is substantial, its short-term goals are oriented more towards purchasing existing houses and flipping them than towards constructing entirely new houses. That being said, the company has access to a large construction workforce operating at rock-bottom prices.

This project uses the Ames housing data recently made available on Kaggle.

import numpy as np

import scipy.stats as stats

import seaborn as sns

import matplotlib.pyplot as plt

import pandas as pd

import patsy

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LassoCV

from math import log, exp, sqrt

sns.set_style('whitegrid')

%config InlineBackend.figure_format = 'retina'

%matplotlib inline

1. Estimating the value of homes from fixed characteristics.

Your superiors have outlined this year’s strategy for the company:

- Develop an algorithm to reliably estimate the value of residential houses based on fixed characteristics.

- Identify characteristics of houses that the company can cost-effectively change/renovate with their construction team.

- Evaluate the mean dollar value of different renovations.

Then we can use that to buy houses that are likely to sell for more than the cost of the purchase plus renovations.

Your first job is to tackle #1. You have a dataset of housing sale data with a huge amount of features identifying different aspects of the house. The full description of the data features can be found in a separate file:

housing.csv

data_description.txt

You need to build a reliable estimator for the price of the house given characteristics of the house that cannot be renovated. Some examples include:

- The neighborhood

- Square feet

- Bedrooms, bathrooms

- Basement and garage space

and many more.

Some examples of things that ARE renovate-able:

- Roof and exterior features

- “Quality” metrics, such as kitchen quality

- “Condition” metrics, such as condition of garage

- Heating and electrical components

and generally anything you deem can be modified without having to undergo major construction on the house.

Your goals:

- Perform any cleaning, feature engineering, and EDA you deem necessary.

- Be sure to remove any houses that are not residential from the dataset.

- Identify fixed features that can predict price.

- Train a model on pre-2010 data and evaluate its performance on the 2010 houses.

- Characterize your model. How well does it perform? What are the best estimates of price?

Note: The EDA and feature engineering component to this project is not trivial! Be sure to always think critically and creatively. Justify your actions! Use the data description file!

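# Load the data
house = pd.read_csv('./housing.csv')

# Load the data description text (the with-block closes the file automatically)
with open('data_description.txt', 'r') as desc_file:
    data_description = desc_file.read()

# print(data_description)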

1.1 Check shape, head and info of house data

shape = house.shape

shape

(1460, 81)

# inspect head by ensuring that all columns are displayed

pd.options.display.max_columns = 100

pd.options.display.max_rows = 100

house.head()

house.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1460 entries, 0 to 1459

Data columns (total 81 columns):

Id 1460 non-null int64

MSSubClass 1460 non-null int64

MSZoning 1460 non-null object

LotFrontage 1201 non-null float64

LotArea 1460 non-null int64

Street 1460 non-null object

Alley 91 non-null object

LotShape 1460 non-null object

LandContour 1460 non-null object

Utilities 1460 non-null object

LotConfig 1460 non-null object

LandSlope 1460 non-null object

Neighborhood 1460 non-null object

Condition1 1460 non-null object

Condition2 1460 non-null object

BldgType 1460 non-null object

HouseStyle 1460 non-null object

OverallQual 1460 non-null int64

OverallCond 1460 non-null int64

YearBuilt 1460 non-null int64

YearRemodAdd 1460 non-null int64

RoofStyle 1460 non-null object

RoofMatl 1460 non-null object

Exterior1st 1460 non-null object

Exterior2nd 1460 non-null object

MasVnrType 1452 non-null object

MasVnrArea 1452 non-null float64

ExterQual 1460 non-null object

ExterCond 1460 non-null object

Foundation 1460 non-null object

BsmtQual 1423 non-null object

BsmtCond 1423 non-null object

BsmtExposure 1422 non-null object

BsmtFinType1 1423 non-null object

BsmtFinSF1 1460 non-null int64

BsmtFinType2 1422 non-null object

BsmtFinSF2 1460 non-null int64

BsmtUnfSF 1460 non-null int64

TotalBsmtSF 1460 non-null int64

Heating 1460 non-null object

HeatingQC 1460 non-null object

CentralAir 1460 non-null object

Electrical 1459 non-null object

1stFlrSF 1460 non-null int64

2ndFlrSF 1460 non-null int64

LowQualFinSF 1460 non-null int64

GrLivArea 1460 non-null int64

BsmtFullBath 1460 non-null int64

BsmtHalfBath 1460 non-null int64

FullBath 1460 non-null int64

HalfBath 1460 non-null int64

BedroomAbvGr 1460 non-null int64

KitchenAbvGr 1460 non-null int64

KitchenQual 1460 non-null object

TotRmsAbvGrd 1460 non-null int64

Functional 1460 non-null object

Fireplaces 1460 non-null int64

FireplaceQu 770 non-null object

GarageType 1379 non-null object

GarageYrBlt 1379 non-null float64

GarageFinish 1379 non-null object

GarageCars 1460 non-null int64

GarageArea 1460 non-null int64

GarageQual 1379 non-null object

GarageCond 1379 non-null object

PavedDrive 1460 non-null object

WoodDeckSF 1460 non-null int64

OpenPorchSF 1460 non-null int64

EnclosedPorch 1460 non-null int64

3SsnPorch 1460 non-null int64

ScreenPorch 1460 non-null int64

PoolArea 1460 non-null int64

PoolQC 7 non-null object

Fence 281 non-null object

MiscFeature 54 non-null object

MiscVal 1460 non-null int64

MoSold 1460 non-null int64

YrSold 1460 non-null int64

SaleType 1460 non-null object

SaleCondition 1460 non-null object

SalePrice 1460 non-null int64

dtypes: float64(3), int64(35), object(43)

memory usage: 924.0+ KB

house.head()

# Understand the unique values of each columns

house.nunique().sort_values()

CentralAir 2

Utilities 2

Street 2

Alley 2

BsmtHalfBath 3

LandSlope 3

GarageFinish 3

HalfBath 3

PavedDrive 3

PoolQC 3

FullBath 4

MasVnrType 4

BsmtExposure 4

ExterQual 4

MiscFeature 4

BsmtFullBath 4

Fence 4

KitchenQual 4

BsmtCond 4

Fireplaces 4

LandContour 4

LotShape 4

KitchenAbvGr 4

BsmtQual 4

FireplaceQu 5

Electrical 5

YrSold 5

GarageCars 5

GarageQual 5

GarageCond 5

HeatingQC 5

ExterCond 5

MSZoning 5

LotConfig 5

BldgType 5

BsmtFinType2 6

Foundation 6

RoofStyle 6

SaleCondition 6

GarageType 6

BsmtFinType1 6

Heating 6

Functional 7

RoofMatl 8

HouseStyle 8

Condition2 8

PoolArea 8

BedroomAbvGr 8

SaleType 9

Condition1 9

OverallCond 9

OverallQual 10

TotRmsAbvGrd 12

MoSold 12

Exterior1st 15

MSSubClass 15

Exterior2nd 16

3SsnPorch 20

MiscVal 21

LowQualFinSF 24

Neighborhood 25

YearRemodAdd 61

ScreenPorch 76

GarageYrBlt 97

LotFrontage 110

YearBuilt 112

EnclosedPorch 120

BsmtFinSF2 144

OpenPorchSF 202

WoodDeckSF 274

MasVnrArea 327

2ndFlrSF 417

GarageArea 441

BsmtFinSF1 637

SalePrice 663

TotalBsmtSF 721

1stFlrSF 753

BsmtUnfSF 780

GrLivArea 861

LotArea 1073

Id 1460

dtype: int64

1.2 Remove rows where MSZoning is non-residential i.e. A, C or I

#Understand the different zoning in the dataset

house['MSZoning'].value_counts()

# The dataset includes commercial properties ('C (all)'), which are not needed for residential pricing

RL 1151

RM 218

FV 65

RH 16

C (all) 10

Name: MSZoning, dtype: int64

# Remove non-residential

house = house[house.MSZoning != 'C (all)']

house.shape

# note: we lost 10 rows.

(1450, 81)

1.3 Inspect null values. Drop ID & columns with more than 40% null values

house.isnull().sum().sort_values(ascending= False).head(10)

# PoolQC, MiscFeature, Alley, Fence,

#FireplaceQu & LotFrontage have a lot of null values

PoolQC 1453

MiscFeature 1406

Alley 1369

Fence 1179

FireplaceQu 690

LotFrontage 259

GarageCond 81

GarageType 81

GarageYrBlt 81

GarageFinish 81

dtype: int64

# Drop the Id column, which is irrelevant for modelling

house.drop('Id', axis=1, inplace=True)

#look for columns with more than 40% null values

null_cols = house.columns[house.isnull().sum()> len(house)*0.4]

null_cols

Index(['Alley', 'FireplaceQu', 'PoolQC', 'Fence', 'MiscFeature'], dtype='object')

#drop columns with more than 40% null values

house.drop(null_cols, axis=1, inplace=True)

1.4 Assess the remaining null values

house.isnull().sum().sort_values(ascending= False).head(10)

LotFrontage 259

GarageType 81

GarageYrBlt 81

GarageCond 81

GarageQual 81

GarageFinish 81

BsmtExposure 38

BsmtFinType2 38

BsmtFinType1 37

BsmtCond 37

dtype: int64

Dropping every row that still contains a null value would shrink the dataset too much (LotFrontage alone is missing in 259 rows), so we fill in the null values instead.
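The fill rule applied in 1.6 (median for numeric columns, mode for categorical ones) can be sketched as a reusable helper that works on a copy and checks each column's dtype instead of relying on an exception. The name fill_nulls and the toy values are hypothetical.

```python
import numpy as np
import pandas as pd


def fill_nulls(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df where numeric NaNs get the column median and
    non-numeric NaNs get the column mode (most frequent value)."""
    filled = df.copy()
    for col in filled.columns:
        if not filled[col].isnull().any():
            continue  # nothing to fill in this column
        if pd.api.types.is_numeric_dtype(filled[col]):
            filled[col] = filled[col].fillna(filled[col].median())
        else:
            filled[col] = filled[col].fillna(filled[col].mode()[0])
    return filled


# Toy frame with one gap per column (made-up values)
toy = pd.DataFrame({
    'LotFrontage': [60.0, np.nan, 80.0, 70.0],
    'GarageType': ['Attchd', 'Attchd', None, 'Detchd'],
})
print(fill_nulls(toy))
```

On the notebook's data this would be used as house = fill_nulls(house).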

1.5 Fill in null values

isnull = pd.DataFrame(house.isnull().sum(), columns=['null_values']).reset_index()

isnull = isnull[isnull['null_values'] != 0]

isnull

1.6 Fill numerical columns with the median and categorical columns with the mode

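for col in isnull['index']:
    if pd.api.types.is_numeric_dtype(house[col]):
        # numerical column: fill with the median
        house[col] = house[col].fillna(house[col].median())
    else:
        # categorical column: fill with the most frequent value (mode)
        house[col] = house[col].fillna(house[col].mode()[0])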

#Check if all the null values are being filled up

house.isnull().sum()

MSSubClass 0

MSZoning 0

LotFrontage 0

LotArea 0

Street 0

LotShape 0

LandContour 0

Utilities 0

LotConfig 0

LandSlope 0

Neighborhood 0

Condition1 0

Condition2 0

BldgType 0

HouseStyle 0

OverallQual 0

OverallCond 0

YearBuilt 0

YearRemodAdd 0

RoofStyle 0

RoofMatl 0

Exterior1st 0

Exterior2nd 0

MasVnrType 0

MasVnrArea 0

ExterQual 0

ExterCond 0

Foundation 0

BsmtQual 0

BsmtCond 0

BsmtExposure 0

BsmtFinType1 0

BsmtFinSF1 0

BsmtFinType2 0

BsmtFinSF2 0

BsmtUnfSF 0

TotalBsmtSF 0

Heating 0

HeatingQC 0

CentralAir 0

Electrical 0

1stFlrSF 0

2ndFlrSF 0

LowQualFinSF 0

GrLivArea 0

BsmtFullBath 0

BsmtHalfBath 0

FullBath 0

HalfBath 0

BedroomAbvGr 0

KitchenAbvGr 0

KitchenQual 0

TotRmsAbvGrd 0

Functional 0

Fireplaces 0

GarageType 0

GarageYrBlt 0

GarageFinish 0

GarageCars 0

GarageArea 0

GarageQual 0

GarageCond 0

PavedDrive 0

WoodDeckSF 0

OpenPorchSF 0

EnclosedPorch 0

3SsnPorch 0

ScreenPorch 0

PoolArea 0

MiscVal 0

MoSold 0

YrSold 0

SaleType 0

SaleCondition 0

SalePrice 0

dtype: int64

1.7 Rename columns whose names start with a digit, so that patsy can parse them later on

# map each leading digit to a word, e.g. '1stFlrSF' -> 'one_stFlrSF'
number = {0: 'zero', 1: 'one', 2: 'two', 3: 'three', 4: 'four', 5: 'five', 6: 'six', 7: 'seven', 8: 'eight', 9: 'nine'}
new_col_names = []
for col in house.columns:
    if col[0].isdigit():
        new_col = number[int(col[0])] + '_' + col[1:]
        new_col_names.append(new_col)
    else:
        new_col_names.append(col)
house.columns = new_col_names
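The same renaming can be packaged as a function and applied with DataFrame.rename(columns=...), which accepts a callable. patsy also provides a Q("...") helper for quoting names that are not valid Python identifiers (e.g. Q("1stFlrSF")), which would avoid renaming altogether. The names DIGIT_WORDS and patsy_safe_name are hypothetical.

```python
# Leading digit -> word, matching the `number` mapping used above
DIGIT_WORDS = {'0': 'zero', '1': 'one', '2': 'two', '3': 'three', '4': 'four',
               '5': 'five', '6': 'six', '7': 'seven', '8': 'eight', '9': 'nine'}


def patsy_safe_name(col: str) -> str:
    """Replace a leading digit with its English word plus '_' so patsy can parse the name."""
    if col and col[0] in DIGIT_WORDS:
        return DIGIT_WORDS[col[0]] + '_' + col[1:]
    return col


for name in ['1stFlrSF', '2ndFlrSF', '3SsnPorch', 'GrLivArea']:
    print(name, '->', patsy_safe_name(name))
```

Usage on the notebook's frame: house = house.rename(columns=patsy_safe_name).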

1.8 Find and handle outliers

house.describe(include='all')

# Flag houses whose LotArea lies more than 6 standard deviations from the mean

threshold = 6 * house['LotArea'].std()

lotarea_mask = abs( house['LotArea'] - house['LotArea'].mean() ) > threshold

house[lotarea_mask]

# create new column for big houses

house['big_house'] = 0

house.loc[lotarea_mask, 'big_house'] = 1

house['big_house'].value_counts()

0 1455

1 5

Name: big_house, dtype: int64
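The 6-standard-deviation rule above can be written as a small reusable function. Note that an extreme value also inflates the standard deviation it is measured against, so with few rows a single outlier may never clear the threshold. The helper name flag_outliers and the toy lot areas are hypothetical.

```python
import pandas as pd


def flag_outliers(s: pd.Series, n_std: float = 6.0) -> pd.Series:
    """Return 1 where a value lies more than n_std sample standard deviations
    from the mean of s, and 0 elsewhere."""
    distance = (s - s.mean()).abs()
    return (distance > n_std * s.std()).astype(int)


# 99 ordinary lot areas plus one very large lot (made-up numbers)
lot_area = pd.Series([9_000, 10_000, 11_000] * 33 + [215_000])
flags = flag_outliers(lot_area)
print(flags.sum(), flags.iloc[-1])
```

Applied to the notebook's data this would read house['big_house'] = flag_outliers(house['LotArea']).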

1.9 Select relevant columns for analysis

# Goal: build a reliable estimator for the price of the house given characteristics
# of the house that CANNOT be renovated.
# Remove non-fixed variables such as Exterior1st, Exterior2nd, ExterQual, ExterCond, BsmtQual, BsmtCond,
# BsmtFinType1, BsmtFinType2, HeatingQC, etc.
# 'CentralAir' and 'Heating' are kept as fixed features here
# 'BsmtExposure' is kept as a fixed feature, as the exposure cannot be changed
# Target = SalePrice

non_fixed = ['OverallQual','OverallCond','YearRemodAdd','RoofStyle','RoofMatl','Exterior1st','Exterior2nd',

'MasVnrType','MasVnrArea','ExterQual','ExterCond','BsmtQual','BsmtCond','BsmtFinType1','BsmtFinSF1',

'BsmtFinType2','BsmtFinSF2','BsmtUnfSF','HeatingQC','Electrical','LowQualFinSF','KitchenQual',

'Functional','GarageFinish','GarageQual','GarageCond','PavedDrive','MiscVal','SaleType','SaleCondition',

'MoSold']

house_new = house.drop(non_fixed, axis=1)

#Checking the shape of the data

house_new.shape

(1460, 45)

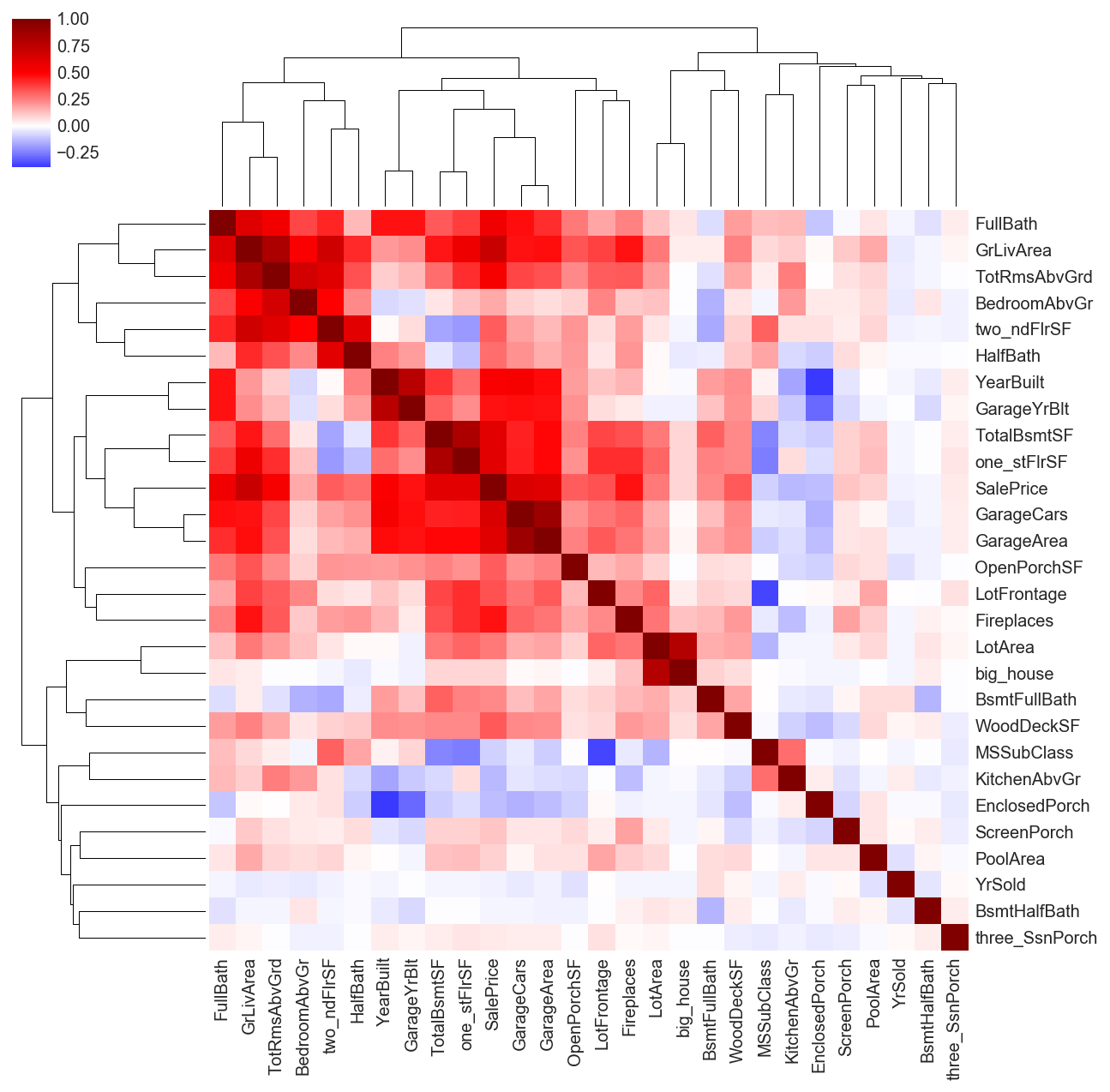

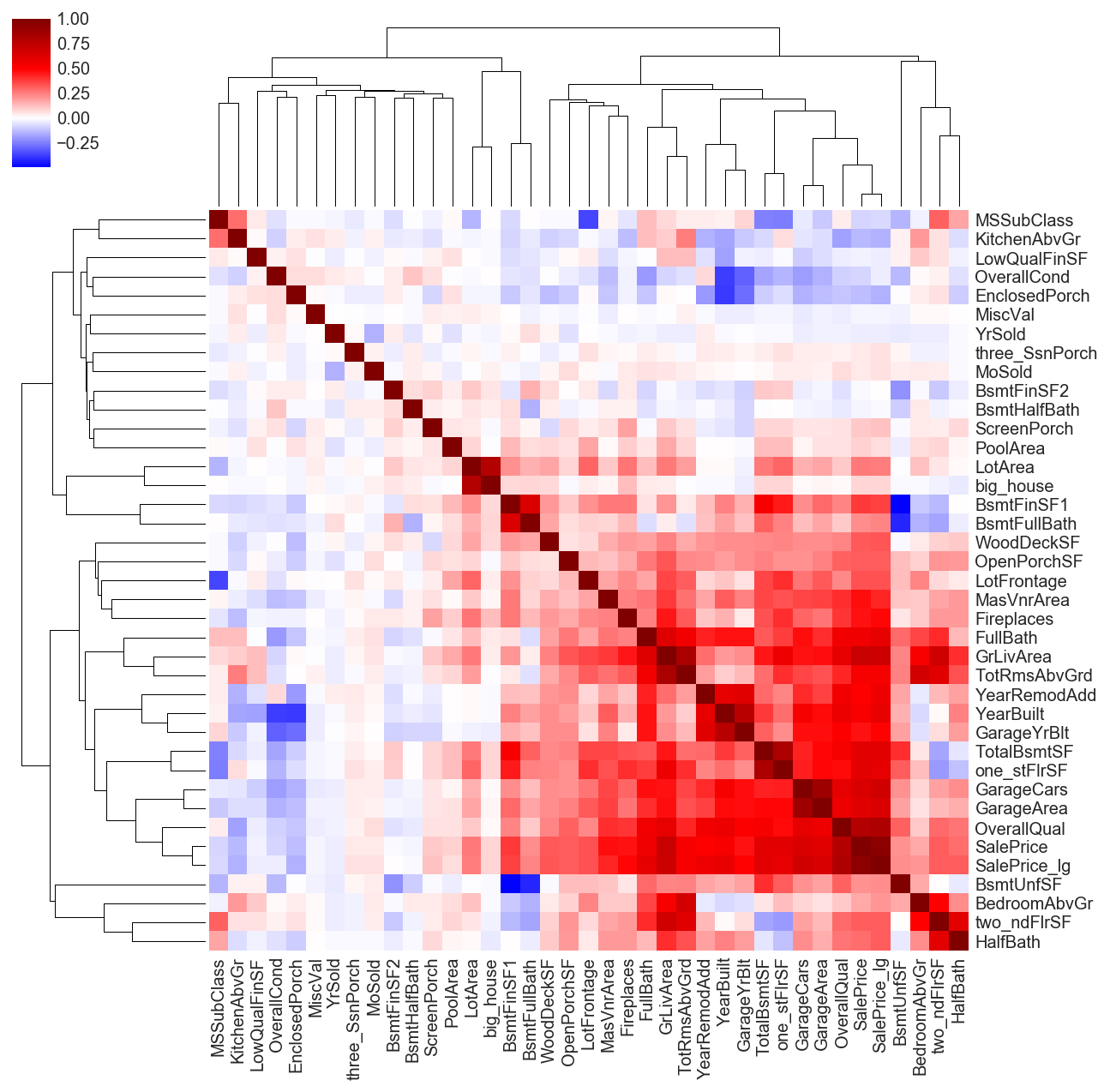

1.10 Explore relationships between SalePrice and other variables

# clustermap draws its own figure, so the size is passed to it directly
sns.clustermap(house_new.corr(numeric_only=True), cmap='seismic', center=0, figsize=(14,14))

abs(house_new.corr(numeric_only=True)['SalePrice']).sort_values(ascending=False).head(10)

SalePrice 1.000000

GrLivArea 0.708624

GarageCars 0.640409

GarageArea 0.623431

TotalBsmtSF 0.613581

one_stFlrSF 0.605852

FullBath 0.560664

TotRmsAbvGrd 0.533723

YearBuilt 0.522897

Fireplaces 0.466929

Name: SalePrice, dtype: float64

1.11 Model: train/test split, scale, fit and predict with LassoCV

target = 'SalePrice'

# create X, y: every other column of house_new is a predictor; ' - 1' drops the intercept
predictors = [c for c in house_new.columns if c != target]
f = target + ' ~ ' + ' + '.join(predictors) + ' - 1'

f

y, X = patsy.dmatrices(f, data=house_new, return_type='dataframe')

# create train (before 2010) / test (2010) split; YrSold is only used for splitting
train_mask = X['YrSold'] != 2010
X_train = X[train_mask].drop('YrSold', axis=1)
y_train = y.loc[train_mask, target]
X_test = X[~train_mask].drop('YrSold', axis=1)
y_test = y.loc[~train_mask, target]

# scale

ss = StandardScaler()

ss.fit(X_train)

Xs_train = ss.transform(X_train)

Xs_test = ss.transform(X_test)

# fit and score

model_lasso = LassoCV(cv=10)

model_lasso.fit(Xs_train, y_train)

score = model_lasso.score(Xs_test, y_test)

y_pred_lasso = model_lasso.predict(Xs_test)

coefs = model_lasso.coef_

print(score)

0.8605892616213779

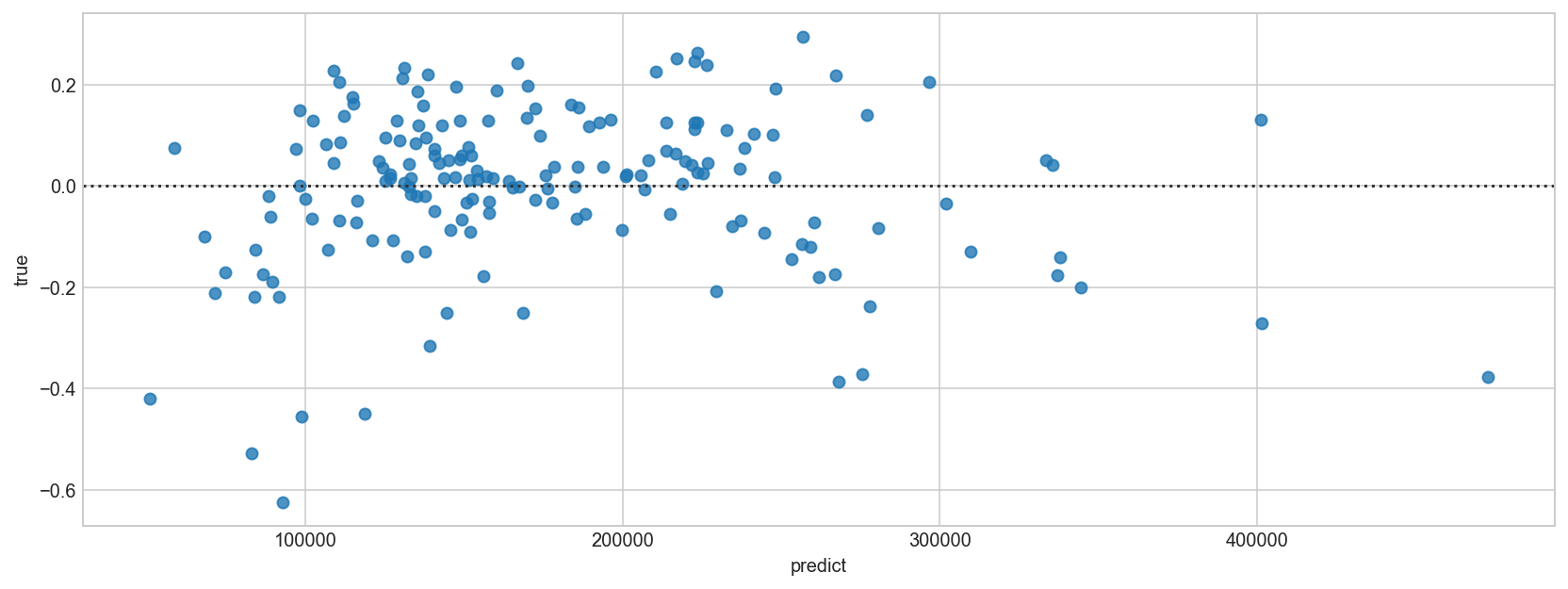

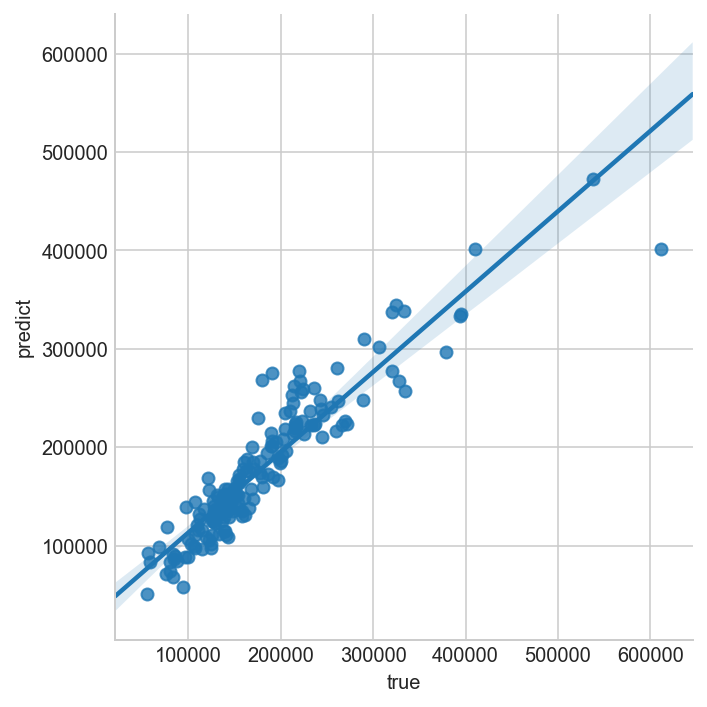

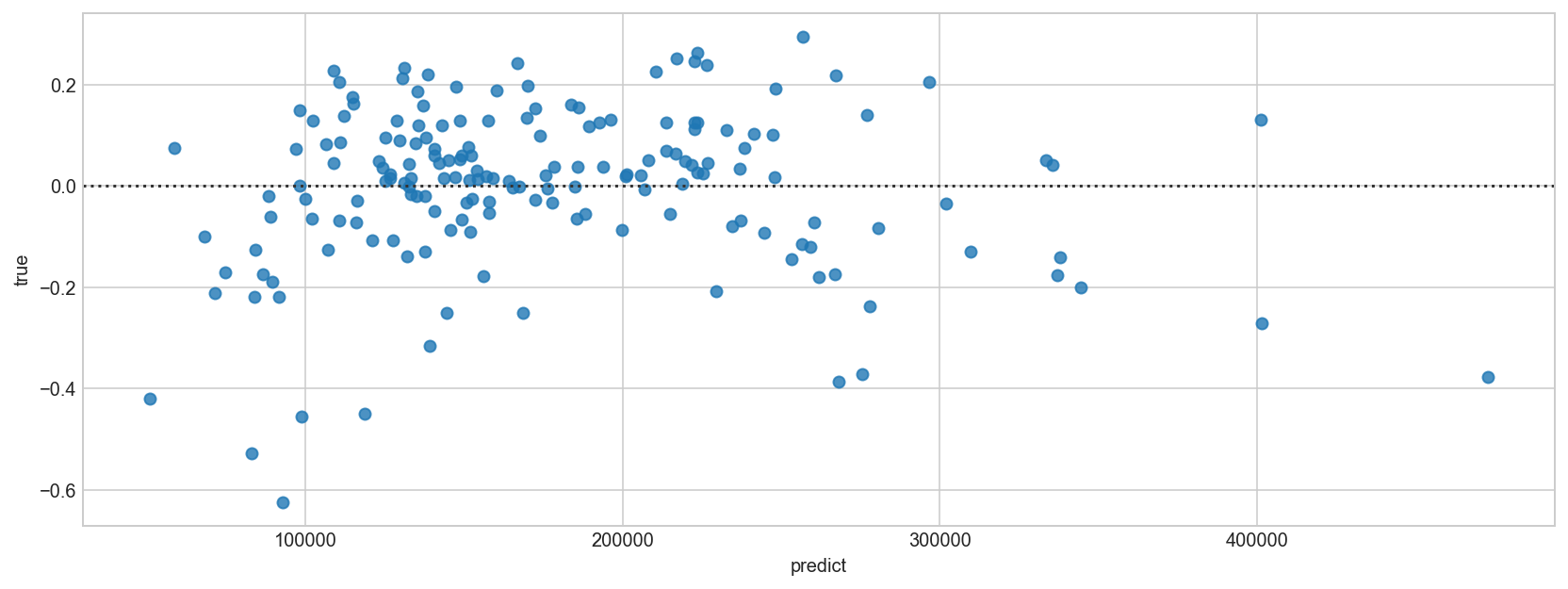

1.12 Plot residuals

residual_plot = pd.DataFrame(list(zip(y_pred_lasso,y_test)), columns=['predict','true'])

sns.lmplot(x='true', y='predict', data=residual_plot)

residual_plot.head()

| | predict | true |
|---|---|---|
| 0 | 153968.943858 | 149000.0 |
| 1 | 151241.501279 | 154000.0 |
| 2 | 132550.282833 | 134800.0 |
| 3 | 301865.495610 | 306000.0 |
| 4 | 175591.208878 | 165500.0 |

plt.figure(figsize=(14,5))

sns.residplot(x='predict', y='true', data=residual_plot)

Because the residuals fan out as prices increase (their variance is not constant), a linear model of the raw SalePrice is not appropriate. We will therefore model the logarithm of SalePrice instead.
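The effect described above can be reproduced on synthetic data. The sketch assumes (it does not use the Ames data) that price grows exponentially with living area and that the noise is multiplicative, i.e. a percentage error. A straight-line fit to raw dollars then leaves residuals that widen for expensive houses, while the same fit to log(price) leaves residuals of roughly constant spread. Predictions from the log model are converted back to dollars with exp(); with normally distributed log errors this gives a median rather than a mean price estimate.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumed, not the Ames data): price grows exponentially with
# living area and the noise is multiplicative (about 20%), like a percentage error.
area = rng.uniform(800, 3500, size=2000)
price = np.exp(10.5 + 0.0006 * area + rng.normal(0, 0.2, size=area.size))


def residual_spread(x, y):
    """Fit y = slope * x + intercept by least squares and return the residual
    standard deviation for the lower and the upper half of x."""
    slope, intercept = np.polyfit(x, y, 1)
    resid = y - (slope * x + intercept)
    low = x < np.median(x)
    return resid[low].std(), resid[~low].std()


raw_low, raw_high = residual_spread(area, price)
log_low, log_high = residual_spread(area, np.log(price))
print(f"raw dollars: low-half std {raw_low:,.0f}, high-half std {raw_high:,.0f}")
print(f"log dollars: low-half std {log_low:.3f}, high-half std {log_high:.3f}")

# Predictions from the log model are converted back to dollars with exp()
slope, intercept = np.polyfit(area, np.log(price), 1)
print(f"predicted price at 2,000 sq ft: ${np.exp(slope * 2000 + intercept):,.0f}")
```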

1.13 Apply a log transform to SalePrice

# add the log of SalePrice as the new target, then drop the raw SalePrice along with the non-fixed columns
house['SalePrice_lg'] = house['SalePrice'].map(log)
house_new_2 = house.drop(non_fixed + ['SalePrice'], axis=1)

# Evaluate the score of the model with SalePrice_lg as the target

# create X, y
predictors = [c for c in house_new_2.columns if c != 'SalePrice_lg']
f = 'SalePrice_lg ~ ' + ' + '.join(predictors) + ' - 1'
y_log, X_log = patsy.dmatrices(f, data=house_new_2, return_type='dataframe')

# create train (before 2010) / test (2010) split; YrSold is only used for splitting
train_mask = X_log['YrSold'] != 2010
X_train_log = X_log[train_mask].drop('YrSold', axis=1)
y_train_log = y_log.loc[train_mask, 'SalePrice_lg']
X_test_log = X_log[~train_mask].drop('YrSold', axis=1)
y_test_log = y_log.loc[~train_mask, 'SalePrice_lg']

# scale

ss = StandardScaler()

ss.fit(X_train_log)

Xs_train_log = ss.transform(X_train_log)

Xs_test_log = ss.transform(X_test_log)

# fit and score

model_lasso_log = LassoCV(cv=10)

model_lasso_log.fit(Xs_train_log, y_train_log)

score_log = model_lasso_log.score(Xs_test_log, y_test_log)

y_pred_lasso_log = model_lasso.predict(Xs_test_log)

coefs_log = model_lasso_log.coef_

print(score_log)

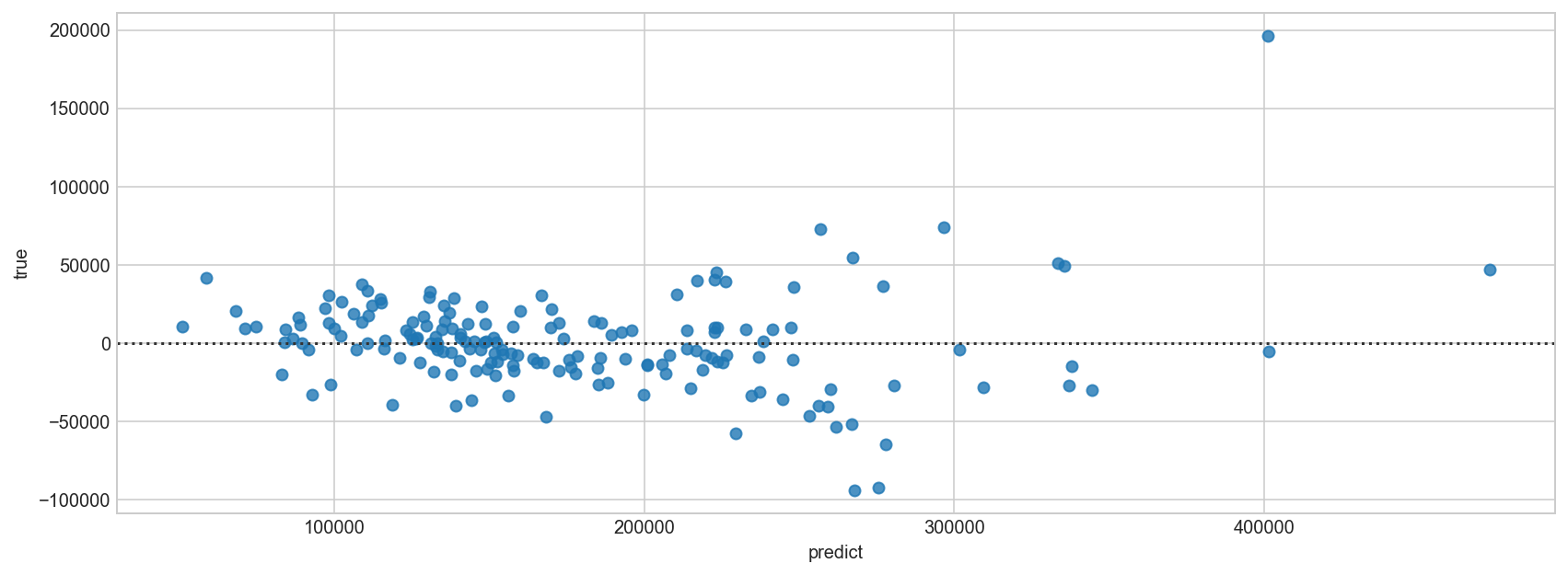

0.8973966456429032

residual_plot = pd.DataFrame(list(zip(y_pred_lasso_log, y_test_log)), columns=['predict','true'])

plt.figure(figsize=(14,5))

sns.residplot(x='predict', y='true', data=residual_plot)

<matplotlib.axes._subplots.AxesSubplot at 0x1a1e71c048>

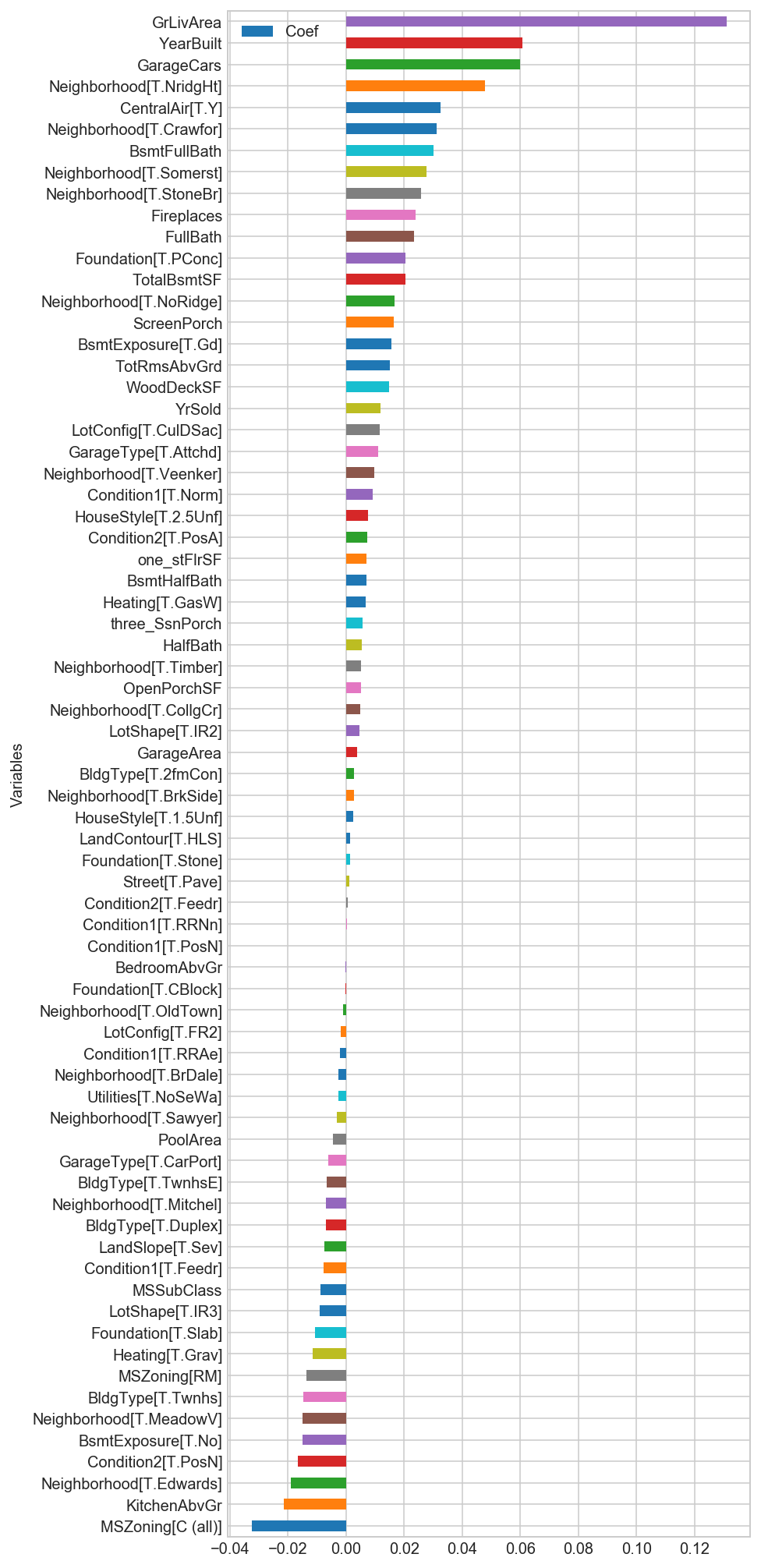

1.14 Identify top features of the housing dataset

lasso_coefs = pd.DataFrame(list(zip(X_train_log.columns, coefs_log)), columns=['Variables','Coef'])

lasso_coefs = lasso_coefs.loc[(lasso_coefs['Coef'] != 0)]

lasso_coefs.sort_values('Coef', ascending=True).plot(x='Variables',y='Coef',figsize=(6,18), kind='barh')

lasso_coefs['Coef_abs'] = lasso_coefs['Coef'].abs()

lasso_coefs.sort_values('Coef_abs', ascending=False).head(10)

| | Variables | Coef | Coef_abs |
|---|---|---|---|
| 95 | GrLivArea | 0.130983 | 0.130983 |
| 91 | YearBuilt | 0.060790 | 0.060790 |
| 105 | GarageCars | 0.059840 | 0.059840 |
| 34 | Neighborhood[T.NridgHt] | 0.047916 | 0.047916 |
| 82 | CentralAir[T.Y] | 0.032553 | 0.032553 |
| 0 | MSZoning[C (all)] | -0.032476 | 0.032476 |
| 24 | Neighborhood[T.Crawfor] | 0.031324 | 0.031324 |
| 96 | BsmtFullBath | 0.030189 | 0.030189 |
| 39 | Neighborhood[T.Somerst] | 0.027759 | 0.027759 |
| 40 | Neighborhood[T.StoneBr] | 0.025956 | 0.025956 |

Top estimators of price (largest absolute Lasso coefficients)

- GrLivArea: Above grade (ground) living area square feet

- YearBuilt: Original construction date

- GarageCars: Size of garage in car capacity

- Neighborhood: NridgHt (Northridge Heights)
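Because the target is log(SalePrice) and the features were standardized, a coefficient b means that a one-standard-deviation increase in that feature multiplies the predicted price by exp(b), i.e. a change of (exp(b) - 1) x 100 percent, and the dollar effect depends on the price you start from. A sketch under those assumptions; the helper name and the baseline price are hypothetical. To express an effect per original unit (e.g. per square foot) rather than per standard deviation, divide the coefficient by that feature's standard deviation, which StandardScaler stores in its scale_ attribute.

```python
import math


def effect_of_one_sd(coef, baseline_price):
    """For a linear model of log(SalePrice) fitted on standardized features,
    return the (percent, dollar) change in price for a one-standard-deviation
    increase in one feature, holding the others fixed."""
    multiplier = math.exp(coef)  # log-scale change -> multiplicative change
    pct_change = (multiplier - 1) * 100
    dollar_change = baseline_price * (multiplier - 1)
    return pct_change, dollar_change


# GrLivArea coefficient from the table above; the $180,000 baseline is hypothetical
pct, dollars = effect_of_one_sd(0.130983, 180_000)
print(f"GrLivArea +1 SD: {pct:.1f}% (about ${dollars:,.0f})")
```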

2. Determine any value of changeable property characteristics unexplained by the fixed ones.

Now that you have a model that estimates the price of a house based on its static characteristics, we can move forward with parts 2 and 3 of the plan: what are the costs/benefits of quality, condition, and renovations?

There are two specific requirements for these estimates:

- The estimates of effects must be in terms of dollars added or subtracted from the house value.

- The effects must be on the variance in price remaining from the first model.

The residuals from the first model (training and testing) represent the variance in price unexplained by the fixed characteristics. Of that variance in price remaining, how much of it can be explained by the easy-to-change aspects of the property?
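One way to meet both requirements, sketched under assumptions: take the first model's signed dollar residuals (actual price minus predicted price; the residual column computed in 2.1 is an absolute value and so loses the direction) and use them as the target of a second linear regression on the renovatable features. Its R² is the share of the remaining variance those features explain, and its coefficients are in dollars per one-unit change. The data below is synthetic and the helper name is hypothetical; on the real data the R² should also be checked on the 2010 residuals rather than only on the rows the second model was fitted on.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


def explain_residuals(residuals_dollars, X_renovatable):
    """Regress the first model's signed dollar residuals on renovatable features.
    Returns (R^2, coefficients): R^2 is the share of the remaining variance the
    features explain; each coefficient is dollars per one-unit change."""
    reg = LinearRegression().fit(X_renovatable, residuals_dollars)
    return reg.score(X_renovatable, residuals_dollars), reg.coef_


# Synthetic example: a 1-5 kitchen quality score worth about $5,000 per step,
# an unrelated feature, and $7,000 of noise the features cannot explain
rng = np.random.default_rng(42)
kitchen_qual = rng.integers(1, 6, size=1000).astype(float)
unrelated = rng.normal(size=1000)
residuals = 5000 * (kitchen_qual - 3) + rng.normal(0, 7000, size=1000)
X_reno = np.column_stack([kitchen_qual, unrelated])

r2, coefs = explain_residuals(residuals, X_reno)
print(f"R^2 = {r2:.2f}; kitchen quality adds about ${coefs[0]:,.0f} per step")
```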

Your goals:

- Evaluate the effect in dollars of the renovate-able features.

- How would your company use this second model and its coefficients to determine whether they should buy a property or not? Explain how the company can use the two models you have built to determine if they can make money.

- Investigate how much of the variance in price remaining is explained by these features.

- Do you trust your model? Should it be used to evaluate which properties to buy and fix up?
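A hypothetical way to combine the two models when screening a listing: the first model's estimate from fixed features, plus the second model's dollar uplift for the planned renovations, gives an expected resale value. The deal is worth pursuing only if that value beats the purchase price plus renovation cost by a safety margin that covers model error (for example, something on the order of the first model's typical absolute residual). The function names, the 10% margin and the prices are illustrative assumptions, not results from this notebook.

```python
def expected_profit(list_price, fixed_value, renovation_uplift, renovation_cost):
    """Expected resale value (model 1 estimate + model 2 renovation uplift)
    minus the total outlay (purchase price + renovation cost)."""
    resale_value = fixed_value + renovation_uplift
    return resale_value - (list_price + renovation_cost)


def should_buy(list_price, fixed_value, renovation_uplift, renovation_cost, margin=0.10):
    """Buy only if the expected profit exceeds `margin` times the total outlay;
    the margin is an assumed buffer against prediction error."""
    outlay = list_price + renovation_cost
    profit = expected_profit(list_price, fixed_value, renovation_uplift, renovation_cost)
    return profit > margin * outlay


print(should_buy(150_000, 170_000, 25_000, 15_000))  # profit 30,000 vs buffer 16,500
print(should_buy(160_000, 165_000, 10_000, 12_000))  # profit 3,000 vs buffer 17,200
```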

2.1 Evaluate the residuals of the fixed-feature model

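# 'predict' holds dollar predictions and 'true' holds log prices (see residual_plot above)
residual_plot['predict_value'] = residual_plot['predict'].map(log)  # log of the dollar prediction
residual_plot['true_value'] = residual_plot['true'].map(exp)  # actual sale price in dollars
residual_plot['residual'] = abs(residual_plot['true_value'] - residual_plot['predict'])  # absolute error in dollars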

residual_plot.head()

| | predict | true | predict_value | true_value | residual |
|---|---|---|---|---|---|
| 0 | 153968.943858 | 11.911702 | 11.944506 | 149000.0 | 4968.943858 |
| 1 | 151241.501279 | 11.944708 | 11.926633 | 154000.0 | 2758.498721 |
| 2 | 132550.282833 | 11.811547 | 11.794717 | 134800.0 | 2249.717167 |
| 3 | 301865.495610 | 12.631340 | 12.617737 | 306000.0 | 4134.504390 |
| 4 | 175591.208878 | 12.016726 | 12.075914 | 165500.0 | 10091.208878 |

fixed_var_residual_mean = residual_plot['residual'].mean()

fixed_var_residual_median = residual_plot['residual'].median()

# after sorting, we see that the largest absolute residual goes up to about $210k!

residual_plot.sort_values('residual', ascending=False).head(10)

| | predict | true | predict_value | true_value | residual |
|---|---|---|---|---|---|
| 111 | 401369.988970 | 13.323927 | 12.902639 | 611657.0 | 210287.011030 |
| 9 | 267974.441612 | 12.100712 | 12.498647 | 180000.0 | 87974.441612 |
| 157 | 275614.504709 | 12.154779 | 12.526758 | 190000.0 | 85614.504709 |
| 147 | 296724.420258 | 12.843971 | 12.600559 | 378500.0 | 81775.579742 |
| 155 | 256726.473750 | 12.721886 | 12.455766 | 335000.0 | 78273.526250 |
| 94 | 472883.944257 | 13.195614 | 13.066605 | 538000.0 | 65116.055743 |
| 55 | 333476.387722 | 12.885202 | 12.717327 | 394432.0 | 60955.612278 |
| 127 | 267333.678918 | 12.700769 | 12.496253 | 328000.0 | 60666.321082 |
| 120 | 335672.669978 | 12.887127 | 12.723892 | 395192.0 | 59519.330022 |
| 27 | 277972.802795 | 12.301383 | 12.535279 | 220000.0 | 57972.802795 |

residual_plot['residual'].describe()

count 175.000000

mean 19578.169117

std 22733.579501

min 18.966037

25% 5978.414157

50% 12536.703886

75% 25344.490982

max 210287.011030

Name: residual, dtype: float64

2.2 EDA for model with changeable features

house.columns

Index(['MSSubClass', 'MSZoning', 'LotFrontage', 'LotArea', 'Street',

'LotShape', 'LandContour', 'Utilities', 'LotConfig', 'LandSlope',

'Neighborhood', 'Condition1', 'Condition2', 'BldgType', 'HouseStyle',

'OverallQual', 'OverallCond', 'YearBuilt', 'YearRemodAdd', 'RoofStyle',

'RoofMatl', 'Exterior1st', 'Exterior2nd', 'MasVnrType', 'MasVnrArea',

'ExterQual', 'ExterCond', 'Foundation', 'BsmtQual', 'BsmtCond',

'BsmtExposure', 'BsmtFinType1', 'BsmtFinSF1', 'BsmtFinType2',

'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', 'Heating', 'HeatingQC',

'CentralAir', 'Electrical', 'one_stFlrSF', 'two_ndFlrSF',

'LowQualFinSF', 'GrLivArea', 'BsmtFullBath', 'BsmtHalfBath', 'FullBath',

'HalfBath', 'BedroomAbvGr', 'KitchenAbvGr', 'KitchenQual',

'TotRmsAbvGrd', 'Functional', 'Fireplaces', 'GarageType', 'GarageYrBlt',

'GarageFinish', 'GarageCars', 'GarageArea', 'GarageQual', 'GarageCond',

'PavedDrive', 'WoodDeckSF', 'OpenPorchSF', 'EnclosedPorch',

'three_SsnPorch', 'ScreenPorch', 'PoolArea', 'MiscVal', 'MoSold',

'YrSold', 'SaleType', 'SaleCondition', 'SalePrice', 'big_house',

'SalePrice_lg'],

dtype='object')

# clustermap draws its own figure, so the size is passed to it directly
sns.clustermap(house.corr(numeric_only=True), cmap='seismic', center=0, figsize=(12,12))

abs(house.corr(numeric_only=True)['SalePrice']).sort_values(ascending=False).head(10)

SalePrice 1.000000

SalePrice_lg 0.948374

OverallQual 0.790982

GrLivArea 0.708624

GarageCars 0.640409

GarageArea 0.623431

TotalBsmtSF 0.613581

one_stFlrSF 0.605852

FullBath 0.560664

TotRmsAbvGrd 0.533723

Name: SalePrice, dtype: float64

2.3 Evaluate residual

# find out the difference in predicted and actual values

residual_plot['predict_value'] = residual_plot['predict'].map(lambda x:log(x))

residual_plot['true_value'] = residual_plot['true'].map(lambda x: exp(x))

residual_plot['residual'] = abs(residual_plot['true_value'] - residual_plot['predict'])

residual_plot.head()

| | predict | true | predict_value | true_value | residual |
|---|---|---|---|---|---|
| 0 | 153968.943858 | 11.911702 | 11.944506 | 149000.0 | 4968.943858 |
| 1 | 151241.501279 | 11.944708 | 11.926633 | 154000.0 | 2758.498721 |
| 2 | 132550.282833 | 11.811547 | 11.794717 | 134800.0 | 2249.717167 |
| 3 | 301865.495610 | 12.631340 | 12.617737 | 306000.0 | 4134.504390 |
| 4 | 175591.208878 | 12.016726 | 12.075914 | 165500.0 | 10091.208878 |

# after sorting, we see that the largest absolute residual goes up to about $210k!

residual_plot.sort_values('residual', ascending=False).head(10)

| | predict | true | predict_value | true_value | residual |
|---|---|---|---|---|---|
| 111 | 401369.988970 | 13.323927 | 12.902639 | 611657.0 | 210287.011030 |
| 9 | 267974.441612 | 12.100712 | 12.498647 | 180000.0 | 87974.441612 |
| 157 | 275614.504709 | 12.154779 | 12.526758 | 190000.0 | 85614.504709 |
| 147 | 296724.420258 | 12.843971 | 12.600559 | 378500.0 | 81775.579742 |
| 155 | 256726.473750 | 12.721886 | 12.455766 | 335000.0 | 78273.526250 |
| 94 | 472883.944257 | 13.195614 | 13.066605 | 538000.0 | 65116.055743 |
| 55 | 333476.387722 | 12.885202 | 12.717327 | 394432.0 | 60955.612278 |
| 127 | 267333.678918 | 12.700769 | 12.496253 | 328000.0 | 60666.321082 |
| 120 | 335672.669978 | 12.887127 | 12.723892 | 395192.0 | 59519.330022 |
| 27 | 277972.802795 | 12.301383 | 12.535279 | 220000.0 | 57972.802795 |

# reuse the full house dataframe (fixed and renovatable features) with the log target
house['SalePrice_lg'] = house['SalePrice'].map(log)

all_var = house.copy()

# predictor names: every column except the raw SalePrice, which would leak the target into X;
# SalePrice_lg stays in the list so that it appears in X_log_all and can be split off below
all_var_new = all_var.columns.drop('SalePrice')

# Evaluate the score of SalePrice_lg with ALL the features

# create X, y
f = 'SalePrice_lg ~ ' + ' + '.join(all_var_new) + ' - 1'

y_all, X_log_all = patsy.dmatrices(f, data=all_var, return_type='dataframe')

# create train (before 2010) test (2010) split

X_train_log_all = X_log_all[X_log_all['YrSold'] != 2010].drop(['SalePrice_lg','YrSold'],axis=1)

y_train_log_all = X_log_all[X_log_all['YrSold'] != 2010]['SalePrice_lg']

X_test_log_all = X_log_all[X_log_all['YrSold'] == 2010].drop(['SalePrice_lg','YrSold'],axis=1)

y_test_log_all = X_log_all[X_log_all['YrSold'] == 2010]['SalePrice_lg']

# scale

ss = StandardScaler()

ss.fit(X_train_log_all)

Xs_train_log_all = ss.transform(X_train_log_all)

Xs_test_log_all = ss.transform(X_test_log_all)

# fit and score

model_lasso_log_all = LassoCV(cv=10)

model_lasso_log_all.fit(Xs_train_log_all, y_train_log_all)

score_log_all = model_lasso_log_all.score(Xs_test_log_all, y_test_log_all)

y_pred_lasso_log_all = model_lasso_log_all.predict(Xs_test_log_all)

coefs_log_all = model_lasso_log_all.coef_

print(score_log_all)

0.9610537276873103

residual_plot_all = pd.DataFrame(list(zip(y_pred_lasso_log_all, y_test_log_all)), columns=['predict','true'])

plt.figure(figsize=(14,5))

sns.residplot(x='predict', y='true', data=residual_plot_all)

residual_plot_all['residual'] = abs(residual_plot_all['true'] - residual_plot_all['predict'])

residual_plot.head()

| | predict | true | predict_value | true_value | residual |
|---|---|---|---|---|---|
| 0 | 153968.943858 | 11.911702 | 11.944506 | 149000.0 | 4968.943858 |
| 1 | 151241.501279 | 11.944708 | 11.926633 | 154000.0 | 2758.498721 |
| 2 | 132550.282833 | 11.811547 | 11.794717 | 134800.0 | 2249.717167 |
| 3 | 301865.495610 | 12.631340 | 12.617737 | 306000.0 | 4134.504390 |
| 4 | 175591.208878 | 12.016726 | 12.075914 | 165500.0 | 10091.208878 |

all_var_residual_mean = residual_plot_all['residual'].mean()

all_var_residual_median = residual_plot_all['residual'].median()

residual_plot_all['residual'].describe()

count 175.000000

mean 0.054052

std 0.059223

min 0.000303

25% 0.016689

50% 0.036289

75% 0.064983

max 0.331339

Name: residual, dtype: float64

print('mean diff:')

print(fixed_var_residual_mean - all_var_residual_mean)

print('median diff:')

print(fixed_var_residual_median - all_var_residual_median)

mean diff:

19578.115064632624

median diff:

12536.667597680169
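The residuals in residual_plot_all are on the log scale (the describe() output above has a mean of about 0.054), whereas fixed_var_residual_mean and fixed_var_residual_median are in dollars, so the differences printed above are almost exactly the dollar figures of the fixed-feature model. A like-for-like comparison converts both models' test-set predictions back to dollars first. A minimal sketch, assuming log-scale prediction arrays from the two models and the shared 2010 log prices; the helper names and toy numbers are hypothetical.

```python
import numpy as np


def dollar_abs_residuals(log_pred, log_true):
    """Absolute errors in dollars for a model fitted on log(price)."""
    return np.abs(np.exp(np.asarray(log_true)) - np.exp(np.asarray(log_pred)))


def compare_models(fixed_log_pred, all_log_pred, log_true):
    """Mean and median reduction in absolute dollar error when moving from the
    fixed-feature model to the all-feature model (positive = all-feature model is better)."""
    fixed_err = dollar_abs_residuals(fixed_log_pred, log_true)
    all_err = dollar_abs_residuals(all_log_pred, log_true)
    return fixed_err.mean() - all_err.mean(), np.median(fixed_err) - np.median(all_err)


# Toy log-scale values (hypothetical, not from the notebook)
log_true = np.log([150_000, 200_000, 250_000])
fixed_pred = np.log([160_000, 190_000, 270_000])
all_pred = np.log([155_000, 195_000, 255_000])
mean_gain, median_gain = compare_models(fixed_pred, all_pred, log_true)
print(mean_gain, median_gain)
```

On the notebook's objects this would be called as compare_models(model_lasso_log.predict(Xs_test_log), y_pred_lasso_log_all, y_test_log), since both models should be evaluated on the same 2010 sales.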

3. What property characteristics predict an “abnormal” sale?

The SaleCondition feature indicates the circumstances of the house sale. From the data file, we can see that the possibilities are:

- Normal: Normal Sale

- Abnorml: Abnormal Sale (trade, foreclosure, short sale)

- AdjLand: Adjoining Land Purchase

- Alloca: Allocation (two linked properties with separate deeds, typically a condo with a garage unit)

- Family: Sale between family members

- Partial: Home was not completed when last assessed (associated with New Homes)

One of the executives at your company has an “in” with higher-ups at the major regional bank. His friends at the bank have made him a proposal: if he can reliably indicate what features, if any, predict “abnormal” sales (foreclosures, short sales, etc.), then in return the bank will give him first dibs on the pre-auction purchase of those properties (at a dirt-cheap price).

He has tasked you with determining (and adequately validating) which features of a property predict this type of sale.

Your task:

- Determine which features predict the Abnorml category in the SaleCondition feature.

- Justify your results.

This is a challenging task that tests your ability to perform classification analysis in the face of severe class imbalance. You may find that simply running a classifier on the full dataset to predict the category ends up useless: with severe class imbalance, classifiers often simply predict the majority class.
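A short check shows why a plain classifier can look good while being useless here: a model that always predicts the majority class scores a high accuracy but an F1 score of zero for the minority class, which is why the models in 3.3 are scored with F1. The labels are hypothetical, with 7% positives.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score

# Hypothetical labels: 7 abnormal sales (1) among 100, i.e. severe class imbalance
y_true = np.array([1] * 7 + [0] * 93)

# A "classifier" that always predicts the majority class (not abnormal)
y_majority = np.zeros_like(y_true)

print("accuracy:", accuracy_score(y_true, y_majority))  # 0.93
print("F1 for the abnormal class:", f1_score(y_true, y_majority, zero_division=0))  # 0.0
```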

It is up to you to determine how you will tackle this problem. I recommend doing some research to find out how others have dealt with the problem in the past. Make sure to justify your solution. Don’t worry about it being “the best” solution, but be rigorous.

Be sure to indicate which features are predictive (if any) and whether they are positive or negative predictors of abnormal sales.

3.1 Train-Test-Split

f = 'SaleCondition + YrSold ~ '+' + '.join([c for c in house.columns if c != 'SaleCondition'])+' - 1'

f

'SaleCondition + YrSold ~ MSSubClass + MSZoning + LotFrontage + LotArea + Street + LotShape + LandContour + Utilities + LotConfig + LandSlope + Neighborhood + Condition1 + Condition2 + BldgType + HouseStyle + OverallQual + OverallCond + YearBuilt + YearRemodAdd + RoofStyle + RoofMatl + Exterior1st + Exterior2nd + MasVnrType + MasVnrArea + ExterQual + ExterCond + Foundation + BsmtQual + BsmtCond + BsmtExposure + BsmtFinType1 + BsmtFinSF1 + BsmtFinType2 + BsmtFinSF2 + BsmtUnfSF + TotalBsmtSF + Heating + HeatingQC + CentralAir + Electrical + one_stFlrSF + two_ndFlrSF + LowQualFinSF + GrLivArea + BsmtFullBath + BsmtHalfBath + FullBath + HalfBath + BedroomAbvGr + KitchenAbvGr + KitchenQual + TotRmsAbvGrd + Functional + Fireplaces + GarageType + GarageYrBlt + GarageFinish + GarageCars + GarageArea + GarageQual + GarageCond + PavedDrive + WoodDeckSF + OpenPorchSF + EnclosedPorch + three_SsnPorch + ScreenPorch + PoolArea + MiscVal + MoSold + YrSold + SaleType + SalePrice + big_house + SalePrice_lg - 1'

y, X = patsy.dmatrices(f, data=house, return_type='dataframe')

# create train test split

X_train = X[X['YrSold'] != 2010].drop(['YrSold'],axis=1)

y_train = y[y['YrSold'] != 2010]['SaleCondition[Abnorml]']

X_test = X[X['YrSold'] == 2010].drop(['YrSold'],axis=1)

y_test = y[y['YrSold'] == 2010]['SaleCondition[Abnorml]']

# scale

ss = StandardScaler()

ss.fit(X_train)

Xs_train = ss.transform(X_train)

Xs_test = ss.transform(X_test)

3.2 Resample the training set with undersampling, oversampling, SMOTE and SMOTEENN

# undersampling: replace majority-class rows with cluster centroids
from imblearn.under_sampling import ClusterCentroids
cc = ClusterCentroids(random_state=0)
X_resampled_under, y_resampled_under = cc.fit_resample(Xs_train, y_train)

# oversampling: duplicate randomly chosen minority-class rows
from imblearn.over_sampling import RandomOverSampler
ros = RandomOverSampler(random_state=0)
X_resampled_over, y_resampled_over = ros.fit_resample(Xs_train, y_train)

# SMOTE: synthesize new minority-class rows by interpolating between neighbours
from imblearn.over_sampling import SMOTE
X_resampled_smote, y_resampled_smote = SMOTE(random_state=0).fit_resample(Xs_train, y_train)

# SMOTEENN: SMOTE followed by Edited Nearest Neighbours cleaning
from imblearn.combine import SMOTEENN
smote_enn = SMOTEENN(random_state=0)
X_resampled_smoteenn, y_resampled_smoteenn = smote_enn.fit_resample(Xs_train, y_train)
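RandomOverSampler duplicates existing minority rows, while SMOTE creates new synthetic rows by interpolating between a minority row and one of its minority-class nearest neighbours. The duplication idea fits in a few lines of numpy; this is a simplified stand-in for illustration (binary target, hypothetical function name), not imblearn's implementation. As in the cell above, only the training set is resampled, so the 2010 test set keeps its real class balance.

```python
import numpy as np


def random_oversample(X, y, random_state=0):
    """Duplicate randomly chosen minority-class rows (sampling with replacement)
    until a binary target has equal class counts."""
    rng = np.random.default_rng(random_state)
    classes, counts = np.unique(y, return_counts=True)
    minority_idx = np.flatnonzero(y == classes[np.argmin(counts)])
    extra = rng.choice(minority_idx, size=counts.max() - counts.min(), replace=True)
    keep = np.concatenate([np.arange(len(y)), extra])  # all original rows + the copies
    return X[keep], y[keep]


X_toy = np.arange(20, dtype=float).reshape(10, 2)
y_toy = np.array([0] * 8 + [1] * 2)
X_res, y_res = random_oversample(X_toy, y_toy)
print(np.bincount(y_res))  # [8 8]
```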

3.3 Logistic Regression with cross validation

from sklearn import metrics

from sklearn.cross_validation import cross_val_score

from sklearn.model_selection import cross_val_predict

from sklearn.metrics import f1_score, make_scorer

# empty list to hold all model scores

overall_scores = []

# make F1 scorer (F1 of the positive class, 1 = abnormal sale)

scorer = make_scorer(f1_score)


from sklearn.linear_model import LogisticRegressionCV

def model_lg(technique, X_resampled, y_resampled, Xs_test, y_test):
    # define model and fit; C is chosen by 5-fold CV on the resampled training data
    lg = LogisticRegressionCV(Cs=50, cv=5, scoring=scorer, penalty='l2')
    lg.fit(X_resampled, y_resampled)

    # mean F1 over every fold and every candidate C stored in scores_
    avg_score = np.mean(list(lg.scores_.values()))

    # predict on the 2010 hold-out set for the classification report and confusion matrix
    y_pred_lg = lg.predict(Xs_test)

    # three largest non-zero coefficients by absolute value (on standardised features)
    coefs = pd.DataFrame(list(zip(X_train.columns, lg.coef_[0])), columns=['Variable', 'Coef'])
    coefs = coefs.loc[coefs['Coef'] != 0].copy()
    coefs['Coef_abs'] = coefs['Coef'].abs()
    top_3_coefs = coefs.sort_values('Coef_abs', ascending=False).head(3)

    # print scores
    print(technique)
    print("-------------------------------------------------------------")
    print(metrics.classification_report(y_test, y_pred_lg))
    print(metrics.confusion_matrix(y_test, y_pred_lg))
    print("Average f-1 score from CV:")
    print(avg_score)
    print("top 3 coefficients: ")
    print(top_3_coefs)

    # append to overall scores
    overall_scores.append((avg_score, "Logistic Regression - ", technique))
    print('-----------------------------------------------------------------')

model_lg('undersampling', X_resampled_under, y_resampled_under, Xs_test, y_test)

model_lg('oversampling', X_resampled_over, y_resampled_over, Xs_test, y_test)

model_lg('SMOTE', X_resampled_smote, y_resampled_smote, Xs_test, y_test)

model_lg('SMOTEENN', X_resampled_smoteenn, y_resampled_smoteenn, Xs_test, y_test)

undersampling

-------------------------------------------------------------

precision recall f1-score support

0.0 1.00 0.40 0.57 164

1.0 0.10 1.00 0.18 11

avg / total 0.94 0.44 0.55 175

[[66 98]

[ 0 11]]

Average f-1 score from CV:

0.5118860339070415

top 3 coefficients:

Variable Coef Coef_abs

190 SaleType[T.Oth] 0.002958 0.002958

144 Heating[T.GasA] 0.002770 0.002770

194 LotArea -0.002634 0.002634

-----------------------------------------------------------------

oversampling

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.86 0.90 164

1.0 0.12 0.27 0.16 11

avg / total 0.89 0.82 0.85 175

[[141 23]

[ 8 3]]

Average f-1 score from CV:

0.8793876680774424

top 3 coefficients:

Variable Coef Coef_abs

192 MSSubClass 15.321701 15.321701

9 LandContour[T.HLS] -14.791268 14.791268

58 BldgType[T.2fmCon] -14.507107 14.507107

-----------------------------------------------------------------

SMOTE

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.87 0.91 164

1.0 0.12 0.27 0.17 11

avg / total 0.90 0.83 0.86 175

[[143 21]

[ 8 3]]

Average f-1 score from CV:

0.8769788528485988

top 3 coefficients:

Variable Coef Coef_abs

65 HouseStyle[T.2.5Unf] -17.920607 17.920607

58 BldgType[T.2fmCon] -16.473324 16.473324

9 LandContour[T.HLS] -16.438882 16.438882

-----------------------------------------------------------------

SMOTEENN

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.74 0.83 164

1.0 0.10 0.45 0.17 11

avg / total 0.90 0.72 0.79 175

[[121 43]

[ 6 5]]

Average f-1 score from CV:

0.9382978528695868

top 3 coefficients:

Variable Coef Coef_abs

229 SalePrice_lg -3.697549 3.697549

227 SalePrice -3.626274 3.626274

189 SaleType[T.New] -3.323360 3.323360

-----------------------------------------------------------------
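`LogisticRegressionCV` stores its cross-validation results in `scores_`, a dict keyed by class label. Each value is an array of shape (n_folds, n_Cs). Taking `np.mean` over that whole array, as `model_lg` does, averages across all 50 candidate values of C, including poorly regularised ones. That is not the same as the score at the C the model actually selected, which is the one with the best mean over folds. The toy illustration below uses made-up numbers (2 folds, 3 values of C).

```python
import numpy as np

# made-up CV F1 scores: rows are folds, columns are candidate C values
scores = np.array([[0.20, 0.90, 0.95],
                   [0.30, 0.88, 0.93]])

mean_over_everything = scores.mean()   # what np.mean(list(lg.scores_.values())) computes
per_C = scores.mean(axis=0)            # mean over folds for each C
best_C_score = per_C.max()             # score at the C with the best mean CV F1

print(round(mean_over_everything, 4))  # 0.6933
print(round(best_C_score, 4))          # 0.94
```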

3.4 Try a decision tree classifier

from sklearn.tree import DecisionTreeClassifier

def model_tree(technique, X_resampled, y_resampled, Xs_test, y_test):
    # run 5-fold cross validation on the resampled training data
    tree = DecisionTreeClassifier()
    scores = cross_val_score(tree, X_resampled, y_resampled, cv=5, scoring=scorer)

    # average cross-validated F1 score
    avg_score = scores.mean()

    # refit on all resampled data and predict on the 2010 hold-out set
    tree.fit(X_resampled, y_resampled)
    y_pred_tree = tree.predict(Xs_test)

    # print scores
    print(technique)
    print('-------------------------------------------------------------')
    print(metrics.classification_report(y_test, y_pred_tree))
    print(metrics.confusion_matrix(y_test, y_pred_tree))
    print('average f-1 score from CV: ')
    print(avg_score)

    # append to overall scores
    overall_scores.append((avg_score, 'Decision Tree - ', technique))

model_tree('undersampling', X_resampled_under, y_resampled_under, Xs_test, y_test)

model_tree('oversampling', X_resampled_over, y_resampled_over, Xs_test, y_test)

model_tree('SMOTE', X_resampled_smote, y_resampled_smote, Xs_test, y_test)

model_tree('SMOTEENN', X_resampled_smoteenn, y_resampled_smoteenn, Xs_test, y_test)

undersampling

-------------------------------------------------------------

precision recall f1-score support

0.0 1.00 0.21 0.34 164

1.0 0.08 1.00 0.14 11

avg / total 0.94 0.26 0.33 175

[[ 34 130]

[ 0 11]]

average f-1 score from CV:

0.7654700854700854

oversampling

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.95 0.95 164

1.0 0.20 0.18 0.19 11

avg / total 0.90 0.90 0.90 175

[[156 8]

[ 9 2]]

average f-1 score from CV:

0.9583570927579039

SMOTE

-------------------------------------------------------------

precision recall f1-score support

0.0 0.94 0.92 0.93 164

1.0 0.07 0.09 0.08 11

avg / total 0.88 0.87 0.88 175

[[151 13]

[ 10 1]]

average f-1 score from CV:

0.9281815909722964

SMOTEENN

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.80 0.87 164

1.0 0.11 0.36 0.17 11

avg / total 0.90 0.78 0.83 175

[[132 32]

[ 7 4]]

average f-1 score from CV:

0.9338618029830025
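By default, `DecisionTreeClassifier` chooses splits by Gini impurity (`criterion='gini'`). A node with class proportions p_k has impurity 1 − Σ p_k², and a candidate split is scored by the size-weighted impurity of its two children. The small sketch below works through that calculation. The parent node uses the same class mix as the 2010 hold-out set (164 normal vs 11 abnormal sales), and the split is hypothetical.

```python
def gini(counts):
    """Gini impurity 1 - sum(p_k^2) of a node with the given class counts."""
    n = sum(counts)
    return 1.0 - sum((c / n) ** 2 for c in counts)

def split_impurity(left, right):
    """Size-weighted Gini impurity of a candidate split."""
    n_left, n_right = sum(left), sum(right)
    n = n_left + n_right
    return (n_left / n) * gini(left) + (n_right / n) * gini(right)

parent = gini([164, 11])                       # same class mix as the 2010 hold-out set
candidate = split_impurity([150, 2], [14, 9])  # hypothetical split of those 175 rows
print(round(parent, 4), round(candidate, 4))   # 0.1178 0.0852
```

The tree greedily keeps the split with the lowest weighted impurity at each node. Because this reduction is computed on raw counts, heavily imbalanced nodes start out with low impurity, which is one reason the resampling step matters for trees too.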

3.5 Try k-nearest neighbours (KNN)

from sklearn.neighbors import KNeighborsClassifier

def model_knn(technique, X_resampled, y_resampled, Xs_test, y_test):
    cv_scores = []

    # candidate odd values of k (1, 3, ..., 13); odd k avoids tied votes between two classes
    neighbors = list(range(1, 15, 2))

    for k in neighbors:
        knn = KNeighborsClassifier(n_neighbors=k)
        scores = cross_val_score(knn, X_resampled, y_resampled, cv=5, scoring=scorer)
        cv_scores.append((scores.mean(), k))

    # obtain best k and its mean CV F1 score (tuples compare by score first; ties go to the larger k)
    best_k = max(cv_scores)[1]
    avg_score = max(cv_scores)[0]

    # refit KNN with best_k and predict on the 2010 hold-out set
    knn = KNeighborsClassifier(n_neighbors=best_k)
    knn.fit(X_resampled, y_resampled)
    y_pred_knn = knn.predict(Xs_test)

    # print scores
    print(technique)
    print('n_neighbours =')
    print(best_k)
    print('-------------------------------------------------------------')
    print(metrics.classification_report(y_test, y_pred_knn))
    print(metrics.confusion_matrix(y_test, y_pred_knn))
    print('average f-1 score from CV:')
    print(avg_score)

    # append to overall scores
    overall_scores.append((avg_score, "KNN -", technique))

    return avg_score

model_knn('undersampling', X_resampled_under, y_resampled_under, Xs_test, y_test)

model_knn('oversampling', X_resampled_over, y_resampled_over, Xs_test, y_test)

model_knn('SMOTE', X_resampled_smote, y_resampled_smote, Xs_test, y_test)

model_knn('SMOTEENN', X_resampled_smoteenn, y_resampled_smoteenn, Xs_test, y_test)

/Users/hayatibintehamzah/anaconda2/envs/py36/lib/python3.6/site-packages/sklearn/metrics/classification.py:1135: UndefinedMetricWarning: F-score is ill-defined and being set to 0.0 due to no predicted samples.

'precision', 'predicted', average, warn_for)


undersampling

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.88 0.92 164

1.0 0.17 0.36 0.24 11

avg / total 0.90 0.85 0.87 175

[[145 19]

[ 7 4]]

average f-1 score from CV:

0.2628985507246377

oversampling

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.92 0.93 164

1.0 0.19 0.27 0.22 11

avg / total 0.90 0.88 0.89 175

[[151 13]

[ 8 3]]

average f-1 score from CV:

0.9541175022628945

SMOTE

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.94 0.79 0.86 164

1.0 0.08 0.27 0.12 11

avg / total 0.89 0.76 0.81 175

[[130 34]

[ 8 3]]

average f-1 score from CV:

0.9033518216530035

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

0.9807279567701113
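Every KNN run above chose n_neighbours = 1. For the oversampling-based variants, the CV scores (0.903 to 0.981) sit far above the hold-out F1 for class 1.0 (0.12 to 0.22). One likely contributor is that resampling happened before cross-validation. A validation fold can then contain an exact copy (random oversampling) or a close interpolation (SMOTE) of a training row, and 1-NN matches it almost trivially. The minimal NumPy sketch of 1-NN below shows the exact-duplicate case on toy data.

```python
import numpy as np

def predict_1nn(X_train, y_train, X_query):
    """Label each query point with the label of its single nearest training point."""
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = d.argmin(axis=1)
    return y_train[nearest], d.min(axis=1)

# toy training fold containing a minority row (label 1) that was duplicated by oversampling
X_fold_train = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [5.0, 5.0]])
y_fold_train = np.array([0, 0, 0, 1])

# the validation fold holds the other copy of that minority row
X_fold_val = np.array([[5.0, 5.0]])
labels, dists = predict_1nn(X_fold_train, y_fold_train, X_fold_val)
print(labels, dists)  # [1] [0.] -> an exact match, so the prediction is trivially right
```

A more honest CV estimate would resample only the training portion of each fold. One way is to wrap the sampler and classifier in an imblearn `Pipeline` and pass that pipeline to `cross_val_score`.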

sorted(overall_scores, reverse=True)

[(0.9807279567701113, 'KNN -', 'SMOTEENN'),

(0.9583570927579039, 'Decision Tree - ', 'oversampling'),

(0.9541175022628945, 'KNN -', 'oversampling'),

(0.9382978528695868, 'Logistic Regression - ', 'SMOTEENN'),

(0.9338618029830025, 'Decision Tree - ', 'SMOTEENN'),

(0.9281815909722964, 'Decision Tree - ', 'SMOTE'),

(0.9033518216530035, 'KNN -', 'SMOTE'),

(0.8793876680774424, 'Logistic Regression - ', 'oversampling'),

(0.8769788528485988, 'Logistic Regression - ', 'SMOTE'),

(0.7654700854700854, 'Decision Tree - ', 'undersampling'),

(0.5118860339070415, 'Logistic Regression - ', 'undersampling'),

(0.2628985507246377, 'KNN -', 'undersampling')]

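KNN with SMOTEENN gives the highest cross-validated F1 score (0.981), followed by Decision Tree with oversampling (0.958) and KNN with oversampling (0.954). These scores are computed on the resampled training data, however. On the 2010 hold-out set, the F1 score for abnormal sales (class 1.0) is only 0.16 for KNN with SMOTEENN.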
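The F1 score for the abnormal class can be read straight off a confusion matrix. In scikit-learn's layout, rows are true labels and columns are predictions. For KNN with SMOTEENN, [[93 71] [4 7]] therefore gives TP = 7, FP = 71 and FN = 4, and F1 = 2TP / (2TP + FP + FN). The snippet below is a quick check against the reported class-1.0 F1 values.

```python
def f1_from_confusion(cm):
    """F1 of the positive class from a 2x2 confusion matrix [[TN, FP], [FN, TP]]."""
    (tn, fp), (fn, tp) = cm
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

knn_smoteenn = [[93, 71], [4, 7]]   # KNN + SMOTEENN on the 2010 hold-out set
lr_under = [[66, 98], [0, 11]]      # logistic regression + undersampling
print(round(f1_from_confusion(knn_smoteenn), 2))  # 0.16
print(round(f1_from_confusion(lr_under), 2))      # 0.18
```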

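Logistic Regression with SMOTEENN identified SalePrice_lg, SalePrice and SaleType[T.New] as its top three predictors (see its coefficient output above). To gauge how much individual predictors matter, we rerun KNN with SMOTEENN once for each column, with that column dropped, and compare the cross-validated F1 score with the original.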
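After the loop below has run, `impact_on_score` holds (impact, column) pairs. Impact is the original CV F1 minus the CV F1 without that column, so a positive value means the score fell when the column was removed. The minimal sketch below ranks such pairs. It uses a handful of the scores printed below, so it covers only a subset of the columns and the ranking is illustrative.

```python
original_score = 0.9807279567701113

# CV F1 with the named column dropped, copied from a few of the runs printed below
scores_without = {
    'MSZoning[C (all)]': 0.9811269384486723,
    'Neighborhood[T.BrkSide]': 0.9787081843046497,
    'Neighborhood[T.Crawfor]': 0.9791352605152799,
    'Neighborhood[T.NWAmes]': 0.9835488392039189,
}

impact_on_score = [(original_score - s, col) for col, s in scores_without.items()]

# largest positive impact first: removing these columns hurt the CV score most
for impact, col in sorted(impact_on_score, reverse=True):
    print(f'{col:<26}{impact:+.5f}')
```

All of these shifts are below 0.003. Differences of this size may well be within the noise of the resampling and cross-validation, so single-column rankings should be read cautiously.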

# measure the change in CV F1 score when each column is dropped

impact_on_score = []

original_score = model_knn('SMOTEENN', X_resampled_smoteenn, y_resampled_smoteenn, Xs_test, y_test)

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

def drop_col(col):
    X_train_drop_col = X_train.drop(col, axis=1)
    X_test_drop_col = X_test.drop(col, axis=1)

    # scale (refit on the reduced training set)
    ss = StandardScaler()
    ss.fit(X_train_drop_col)
    Xs_train_drop_col = ss.transform(X_train_drop_col)
    Xs_test_drop_col = ss.transform(X_test_drop_col)

    # resample with SMOTEENN
    X_resampled_smoteenn_drop_col, y_resampled_smoteenn_drop_col = smote_enn.fit_resample(
        Xs_train_drop_col, y_train)

    # run model (note: model_knn also appends each run to overall_scores)
    print(col, 'dropped')
    print('------------')
    new_score = model_knn('SMOTEENN', X_resampled_smoteenn_drop_col,
                          y_resampled_smoteenn_drop_col, Xs_test_drop_col, y_test)

    # positive impact = the CV F1 score fell without this column
    impact = original_score - new_score
    impact_on_score.append((impact, col))

for col in list(X_train.columns):
    drop_col(col)

MSZoning[C (all)] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.94 0.57 0.71 164

1.0 0.07 0.45 0.12 11

avg / total 0.89 0.57 0.67 175

[[94 70]

[ 6 5]]

average f-1 score from CV:

0.9811269384486723

MSZoning[FV] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

MSZoning[RH] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.57 0.71 164

1.0 0.08 0.55 0.14 11

avg / total 0.89 0.57 0.68 175

[[94 70]

[ 5 6]]

average f-1 score from CV:

0.9811335195065155

MSZoning[RL] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9807361630079079

MSZoning[RM] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9811401209597689

Street[T.Pave] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

LotShape[T.IR2] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9815341363558903

LotShape[T.IR3] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807296020138458

LotShape[T.Reg] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.58 0.72 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.58 0.68 175

[[95 69]

[ 5 6]]

average f-1 score from CV:

0.9803834187868767

LandContour[T.HLS] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.58 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.91 0.58 0.69 175

[[95 69]

[ 4 7]]

average f-1 score from CV:

0.9811402921824467

LandContour[T.Low] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

LandContour[T.Lvl] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803322455152383

Utilities[T.NoSeWa] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

LotConfig[T.CulDSac] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9819615169333844

LotConfig[T.FR2] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807296020138458

LotConfig[T.FR3] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9811302188632206

LotConfig[T.Inside] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.73 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.59 0.69 175

[[97 67]

[ 5 6]]

average f-1 score from CV:

0.9831580637631545

LandSlope[T.Mod] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9827708551336316

LandSlope[T.Sev] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9807195943862063

Neighborhood[T.Blueste] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Neighborhood[T.BrDale] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9799299834221289

Neighborhood[T.BrkSide] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9787081843046497

Neighborhood[T.ClearCr] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807163139716577

Neighborhood[T.CollgCr] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.57 0.71 164

1.0 0.08 0.55 0.14 11

avg / total 0.89 0.57 0.68 175

[[94 70]

[ 5 6]]

average f-1 score from CV:

0.9815490052348915

Neighborhood[T.Crawfor] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9791352605152799

Neighborhood[T.Edwards] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9815843296071067

Neighborhood[T.Gilbert] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.72 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.58 0.69 175

[[96 68]

[ 5 6]]

average f-1 score from CV:

0.9791132330972907

Neighborhood[T.IDOTRR] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9811218564793155

Neighborhood[T.MeadowV] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803359486249974

Neighborhood[T.Mitchel] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.58 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.91 0.58 0.69 175

[[95 69]

[ 4 7]]

average f-1 score from CV:

0.9791397803828457

Neighborhood[T.NAmes] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.97 0.57 0.72 164

1.0 0.10 0.73 0.18 11

avg / total 0.91 0.58 0.69 175

[[94 70]

[ 3 8]]

average f-1 score from CV:

0.9827658950530523

Neighborhood[T.NPkVill] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9799299834221289

Neighborhood[T.NWAmes] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.73 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.59 0.69 175

[[97 67]

[ 5 6]]

average f-1 score from CV:

0.9835488392039189

Neighborhood[T.NoRidge] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.72 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.58 0.69 175

[[96 68]

[ 5 6]]

average f-1 score from CV:

0.9795077912751008

Neighborhood[T.NridgHt] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

Neighborhood[T.OldTown] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.57 0.71 164

1.0 0.08 0.55 0.14 11

avg / total 0.89 0.57 0.68 175

[[94 70]

[ 5 6]]

average f-1 score from CV:

0.9799265568523323

Neighborhood[T.SWISU] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9803306002715036

Neighborhood[T.Sawyer] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.73 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.59 0.69 175

[[97 67]

[ 5 6]]

average f-1 score from CV:

0.9811466932708296

Neighborhood[T.SawyerW] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.59 0.73 164

1.0 0.09 0.64 0.16 11

avg / total 0.91 0.59 0.69 175

[[96 68]

[ 4 7]]

average f-1 score from CV:

0.9803322455152383

Neighborhood[T.Somerst] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803173322930967

Neighborhood[T.StoneBr] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.59 0.73 164

1.0 0.09 0.64 0.16 11

avg / total 0.91 0.59 0.69 175

[[96 68]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Neighborhood[T.Timber] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.95 0.59 0.72 164

1.0 0.08 0.55 0.14 11

avg / total 0.90 0.58 0.69 175

[[96 68]

[ 5 6]]

average f-1 score from CV:

0.9835354798179541

Neighborhood[T.Veenker] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.980315697122283

Condition1[T.Feedr] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9811318641069553

Condition1[T.Norm] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.981527555298047

Condition1[T.PosA] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.55 0.70 164

1.0 0.09 0.64 0.15 11

avg / total 0.90 0.55 0.66 175

[[90 74]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

Condition1[T.PosN] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.981128583692407

Condition1[T.RRAe] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.58 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.91 0.58 0.69 175

[[95 69]

[ 4 7]]

average f-1 score from CV:

0.9803306002715036

Condition1[T.RRAn] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807163139716577

Condition1[T.RRNe] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

Condition1[T.RRNn] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.Feedr] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

Condition2[T.Norm] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.PosA] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.PosN] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.RRAe] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.72 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.58 0.68 175

[[94 70]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.RRAn] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9807279567701113

Condition2[T.RRNn] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.57 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.68 175

[[93 71]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

BldgType[T.2fmCon] dropped

------------

SMOTEENN

n_neighbours =

1

-------------------------------------------------------------

precision recall f1-score support

0.0 0.96 0.56 0.71 164

1.0 0.09 0.64 0.16 11

avg / total 0.90 0.57 0.67 175

[[92 72]

[ 4 7]]

average f-1 score from CV:

0.9803273399207365

BldgType[T.Duplex] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.95      0.57      0.71       164
        1.0       0.08      0.55      0.14        11

avg / total       0.89      0.57      0.68       175

[[94 70]
 [ 5  6]]
average f-1 score from CV:
0.9823253724999208

BldgType[T.Twnhs] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.57      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.68       175

[[93 71]
 [ 4  7]]
average f-1 score from CV:
0.9799348590432156

BldgType[T.TwnhsE] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.57      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.68       175

[[93 71]
 [ 4  7]]
average f-1 score from CV:
0.9811302188632206

HouseStyle[T.1.5Unf] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.57      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.68       175

[[93 71]
 [ 4  7]]
average f-1 score from CV:
0.9795277413928009

HouseStyle[T.1Story] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.59      0.73       164
        1.0       0.09      0.64      0.16        11

avg / total       0.91      0.59      0.69       175

[[96 68]
 [ 4  7]]
average f-1 score from CV:
0.9819415359541172

HouseStyle[T.2.5Fin] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.56      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.67       175

[[92 72]
 [ 4  7]]
average f-1 score from CV:
0.9803322455152383

HouseStyle[T.2.5Unf] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.57      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.68       175

[[93 71]
 [ 4  7]]
average f-1 score from CV:
0.9803273399207365

HouseStyle[T.2Story] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.59      0.73       164
        1.0       0.09      0.64      0.16        11

avg / total       0.91      0.59      0.70       175

[[97 67]
 [ 4  7]]
average f-1 score from CV:
0.9811384251010173

HouseStyle[T.SFoyer] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.55      0.70       164
        1.0       0.09      0.64      0.15        11

avg / total       0.90      0.56      0.67       175

[[91 73]
 [ 4  7]]
average f-1 score from CV:
0.9819450071532604

HouseStyle[T.SLvl] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.95      0.58      0.72       164
        1.0       0.08      0.55      0.14        11

avg / total       0.90      0.58      0.68       175

[[95 69]
 [ 5  6]]
average f-1 score from CV:
0.9807228950295009

RoofStyle[T.Gable] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.97      0.57      0.72       164
        1.0       0.10      0.73      0.18        11

avg / total       0.91      0.58      0.69       175

[[94 70]
 [ 3  8]]
average f-1 score from CV:
0.9815728023306051

RoofStyle[T.Gambrel] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.56      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.67       175

[[92 72]
 [ 4  7]]
average f-1 score from CV:
0.9815292005417817

RoofStyle[T.Hip] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.97      0.57      0.72       164
        1.0       0.10      0.73      0.18        11

avg / total       0.91      0.58      0.68       175

[[93 71]
 [ 3  8]]
average f-1 score from CV:
0.9815793031316451

RoofStyle[T.Mansard] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.56      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.67       175

[[92 72]
 [ 4  7]]
average f-1 score from CV:
0.9807279567701113

RoofStyle[T.Shed] dropped
------------
SMOTEENN
n_neighbours = 1
-------------------------------------------------------------
             precision    recall  f1-score   support

        0.0       0.96      0.57      0.71       164
        1.0       0.09      0.64      0.16        11

avg / total       0.90      0.57      0.68       175

[[93 71]
 [ 4  7]]